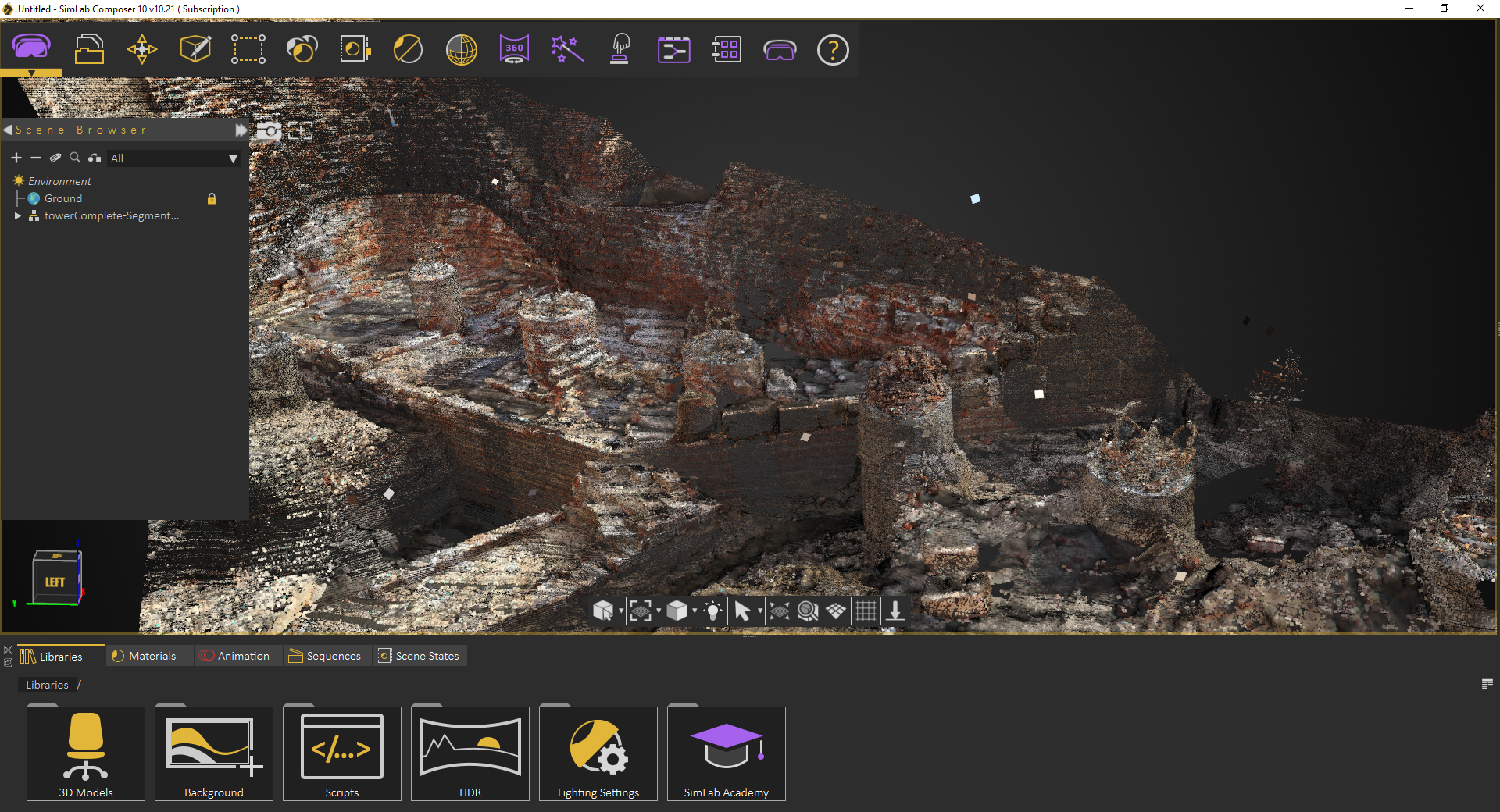

Our single-stage pipeline is illustrated by the diagram in Fig 6. The model backbone has an encoder-decoder architecture, built upon MobileNetv2. We employ a multi-task learning approach, jointly predicting an object's shape with detection and regression. The shape task predicts the object's shape signals depending on what ground truth annotation is available; it is optional if there is no shape annotation in the training data. For the detection task, we use the annotated bounding boxes and fit a Gaussian to each box, with its center at the box centroid and standard deviations proportional to the box size.
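As a minimal sketch of the Gaussian target described for the detection task, the following pure-Python snippet renders a heatmap for one 2D box. The function names, the `sigma_scale` constant, and the axis-aligned `(x_min, y_min, x_max, y_max)` box format are assumptions made for illustration; the text does not state the proportionality factor or how the target is rasterized.

```python
import math


def gaussian_heatmap(width, height, box, sigma_scale=0.1):
    """Render a 2D Gaussian target for one axis-aligned box.

    box is (x_min, y_min, x_max, y_max) in pixels.
    sigma_scale is a hypothetical proportionality constant.
    """
    x_min, y_min, x_max, y_max = box
    # Center the Gaussian at the box centroid.
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    # Standard deviations proportional to the box size (guard against zero).
    sigma_x = max(sigma_scale * (x_max - x_min), 1e-6)
    sigma_y = max(sigma_scale * (y_max - y_min), 1e-6)

    heatmap = []
    for y in range(height):
        row = []
        for x in range(width):
            exponent = ((x - cx) ** 2) / (2 * sigma_x ** 2) \
                     + ((y - cy) ** 2) / (2 * sigma_y ** 2)
            row.append(math.exp(-exponent))
        heatmap.append(row)
    return heatmap


def peak_location(heatmap):
    """Return (x, y) of the largest heatmap value."""
    best_value, best_xy = -1.0, None
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_xy = value, (x, y)
    return best_xy


heatmap = gaussian_heatmap(32, 24, (8, 4, 20, 16))
print(peak_location(heatmap))  # (14, 10), the box centroid
```

Because a Gaussian peaks at its mean, the maximum of the rendered map sits at the box centroid, and a wider box yields a broader response along that axis.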

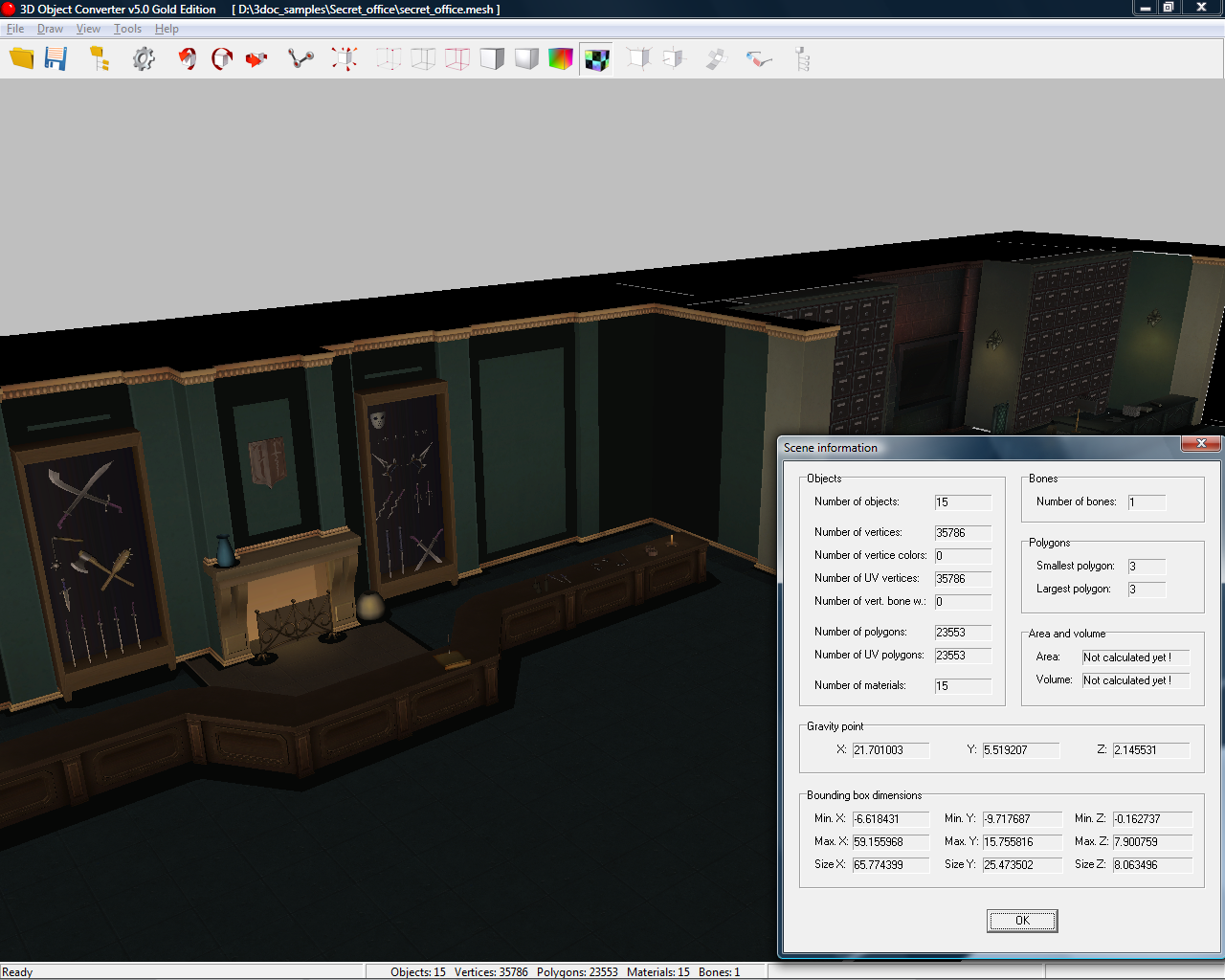

Network architecture and post-processing for single-stage 3D object detection. By combining real-world data and AR synthetic data, we are able to increase the accuracy by about 10%.įig 6. This approach results in high-quality synthetic data with rendered objects that respect the scene geometry and fit seamlessly into real backgrounds. Our novel approach, called AR Synthetic Data Generation, places virtual objects into scenes that have AR session data, which allows us to leverage camera poses, detected planar surfaces, and estimated lighting to generate placements that are physically probable and with lighting that matches the scene. However, attempts to do so often yield poor, unrealistic data or, in the case of photorealistic rendering, require significant effort and compute. (Left) Projections of annotated 3D bounding boxes are overlaid on top of video frames making it easy to validate the annotation.Ī popular approach is to complement real-world data with synthetic data in order to increase the accuracy of prediction. (Right) 3D bounding boxes are annotated in the 3D world with detected surfaces and point clouds. Real-world data annotation for 3D object detection.

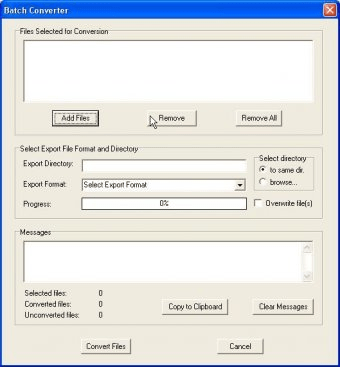

For static objects, we only need to annotate an object in a single frame and propagate its location to all frames using the ground truth camera pose information from the AR session data, which makes the procedure highly efficient.įig 3. Annotators draw 3D bounding boxes in the 3D view, and verify its location by reviewing the projections in 2D video frames. This tool uses a split-screen view to display 2D video frames on which are overlaid 3D bounding boxes on the left, alongside a view showing 3D point clouds, camera positions and detected planes on the right.

In order to label ground truth data, we built a novel annotation tool for use with AR session data, which allows annotators to quickly label 3D bounding boxes for objects. With the arrival of ARCore and ARKit, hundreds of millions of smartphones now have AR capabilities and the ability to capture additional information during an AR session, including the camera pose, sparse 3D point clouds, estimated lighting, and planar surfaces.

To overcome this problem, we developed a novel data pipeline using mobile augmented reality (AR) session data. While there are ample amounts of 3D data for street scenes, due to the popularity of research into self-driving cars that rely on 3D capture sensors like LIDAR, datasets with ground truth 3D annotations for more granular everyday objects are extremely limited. This site uses Just the Docs, a documentation theme for Jekyll.
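The single-frame annotation of static objects described earlier can be illustrated with a short sketch: a box annotated once in world coordinates is projected into every frame using that frame's camera pose. Everything here is an assumption for illustration, not the tool's actual implementation: the function names, the axis-aligned box, the world-to-camera pose convention `(rotation, translation)`, and the pinhole intrinsics `(fx, fy, px, py)` with the camera looking along +z.

```python
def box_corners(center, size):
    """Return the 8 corners of an axis-aligned 3D box in world coordinates."""
    cx, cy, cz = center
    hx, hy, hz = (s / 2.0 for s in size)
    return [(cx + sx * hx, cy + sy * hy, cz + sz * hz)
            for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]


def project_point(point, rotation, translation, intrinsics):
    """Project a world point to pixel coordinates.

    rotation is a 3x3 world-to-camera matrix (nested lists) and translation
    a 3-vector; both are hypothetical conventions. Returns None when the
    point lies behind the camera.
    """
    cam = [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
           for i in range(3)]
    if cam[2] <= 0:
        return None
    fx, fy, px, py = intrinsics
    return (fx * cam[0] / cam[2] + px, fy * cam[1] / cam[2] + py)


def propagate_annotation(corners, poses, intrinsics):
    """Project one set of world-space corners into every frame's image."""
    return [[project_point(c, rotation, translation, intrinsics)
             for c in corners]
            for rotation, translation in poses]


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
intrinsics = (100.0, 100.0, 320.0, 240.0)
# Two frames: the second camera pose shifts the scene by one unit along x.
poses = [(identity, (0, 0, 0)), (identity, (1, 0, 0))]

corners = box_corners(center=(0, 0, 4), size=(2, 2, 2))
frames = propagate_annotation(corners, poses, intrinsics)
print(len(frames), len(frames[0]))  # 2 8
```

The box is labeled once in the 3D world, so no per-frame labeling is needed; only the per-frame pose changes between projections.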
